Solving 'Your Session Crashed After Using All Available RAM' in Google Colab

😊 When working with Google Colab, a cloud-based Python execution environment, you might encounter session crashes if your code consumes all the allocated memory. Such scenarios could arise from having numerous variables or executing memory-intensive operations. To circumvent this, consider refining your code for better memory efficiency, choosing a Colab instance with more memory, or keeping an eye on your code's memory consumption to ensure it stays within the system's limits.
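To make "keeping an eye on memory consumption" concrete, here is a minimal sketch using Python's built-in tracemalloc module. The helper name measure_peak_memory and the build_list workload are hypothetical, made up for illustration. Keep in mind that tracemalloc only sees allocations made through Python's allocator, so treat the number as a rough guide rather than the exact figure Colab shows in its RAM indicator.

```python
import tracemalloc


def measure_peak_memory(func, *args, **kwargs):
    """Run func and return (result, peak_bytes) as traced by tracemalloc."""
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        # get_traced_memory() returns (current_bytes, peak_bytes)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def build_list(n):
    # Hypothetical workload: materializes n integers in memory at once.
    return list(range(n))


result, peak = measure_peak_memory(build_list, 1_000_000)
print(f"Peak traced memory: {peak / 1024**2:.1f} MiB")
```

Wrapping a suspicious step this way can help you spot which part of a notebook is responsible for most of the memory growth.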

Resolving Common Memory Errors in Google Colab

Encountering memory errors in Google Colab? We've all been there. Often, these arise when we're processing large files or hefty datasets. In this section, we'll break down the reasons and offer simple yet effective solutions.

1. Large File Loads: One primary culprit behind memory errors is loading sizeable files entirely into RAM. Instead:

- Text Files: Try reading them line by line. This ensures that only a portion of the file is loaded into memory at any given time.

- CSV Files: Think about partitioning your file and loading it in segments.

2. Machine Learning Model Training: If your dataset comprises images, a high batch size can quickly occupy available RAM. A quick fix? Reduce the batch size. This way, fewer images are loaded into memory during each iteration, keeping the RAM usage in check.

These solutions cover the most common scenarios, but other situations can also cause memory errors. As a rule of thumb, process data in batches: only one batch needs to sit in RAM at a time, so peak memory use stays bounded no matter how large the full dataset is.
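The batching idea above can be sketched in a few lines of plain Python. The function name iter_batches is my own, not part of any library; it simply pulls a fixed number of items at a time from any iterable, so the full sequence never has to be held in memory.

```python
from itertools import islice


def iter_batches(items, batch_size):
    """Yield lists of up to batch_size items from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        # islice consumes at most batch_size items from the iterator
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Usage: sum the numbers 0..9 three at a time.
total = 0
for batch in iter_batches(range(10), 3):
    total += sum(batch)
print(total)
```

Because the helper works on any iterable, the same pattern applies to file lines, database rows, or lists of image paths.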

In the next section, we'll look more closely at the situations where these errors most often appear and walk through code-based solutions.

How to Address the Memory Error in Google Colab

In this piece, I'll spotlight some common scenarios that might trigger memory errors in Google Colab and arm you with actionable solutions.

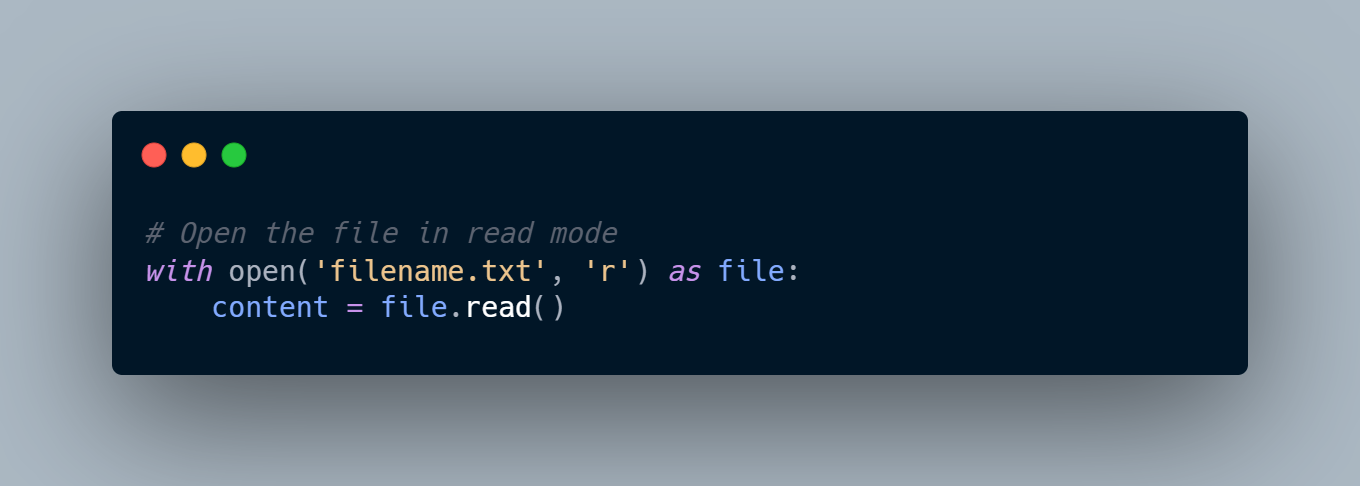

1. Reading Large Text Files

If you've stumbled upon this error while trying to read an extensive .txt file, you're not alone. A common oversight is to read the entire file in one go, like so:

    with open('large_file.txt', 'r') as file:
        content = file.read()

This approach loads the complete text file into RAM. If the file's size surpasses the RAM's capacity, Google Colab is bound to crash.

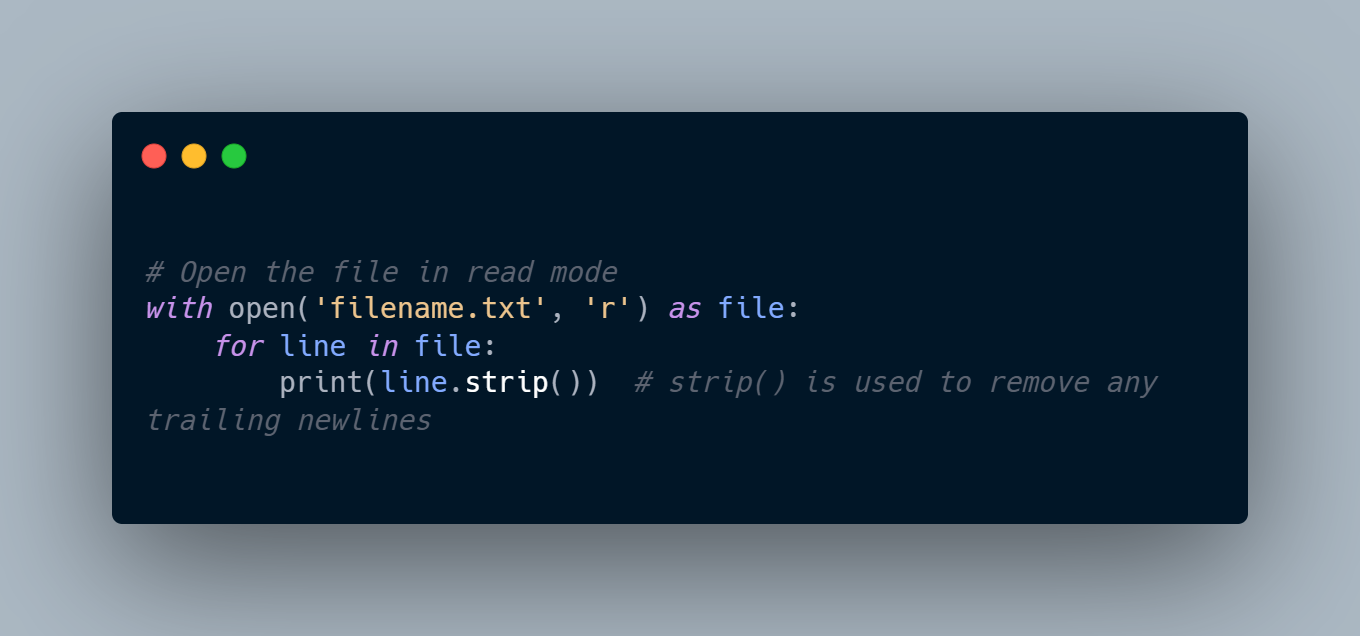

Solution: Instead of reading the entire file all at once, you can opt to read it line by line:

    with open('large_file.txt', 'r') as file:
        for line in file:
            # Process the line here; avoid collecting every line in a list,
            # which would bring the whole file back into RAM
            pass

This method ensures that only a fragment of the file is in memory at any moment, considerably reducing the risk of memory overload.
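As a slightly fuller illustration of the line-by-line approach, the sketch below counts lines and words while keeping only running totals in memory. The function name count_lines_and_words and the tiny temporary file are assumptions for the demo; in practice you would pass the path of your own large file.

```python
import os
import tempfile


def count_lines_and_words(path):
    """Stream a text file and return (line_count, word_count)."""
    line_count = 0
    word_count = 0
    with open(path, "r", encoding="utf-8") as file:
        for line in file:  # only one line is held in memory at a time
            line_count += 1
            word_count += len(line.split())
    return line_count, word_count


# Small demonstration file standing in for a large one.
with tempfile.NamedTemporaryFile(
    "w", suffix=".txt", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("first line\nsecond line here\n\nlast\n")
    demo_path = tmp.name

counts = count_lines_and_words(demo_path)
os.remove(demo_path)
print(counts)
```

The key design choice is that results are accumulated as small totals rather than stored line by line, so memory use does not grow with the file size.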

In subsequent sections, I will delve into other frequent triggers of this error in Google Colab and elucidate the remedies, complete with sample code.

Addressing Memory Errors When Training CNN Image Models in Google Colab

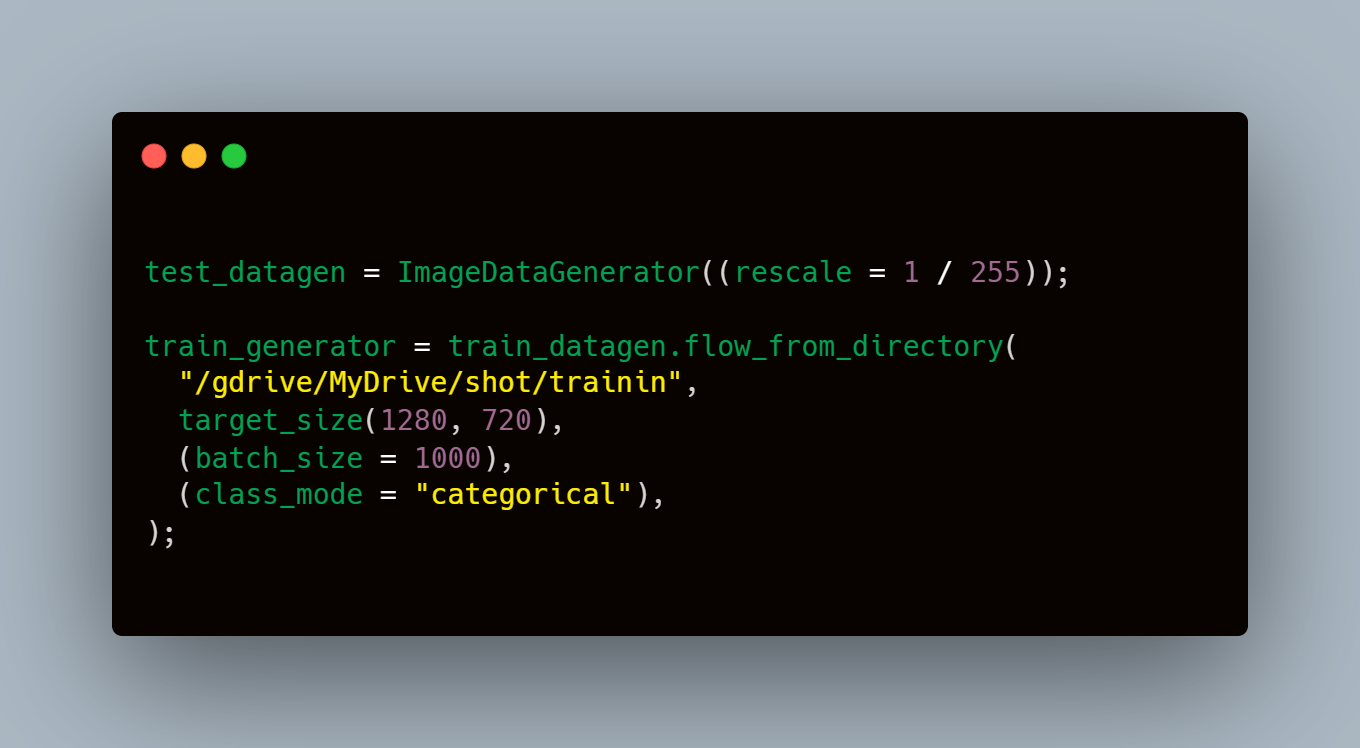

If you're encountering memory issues while training a Convolutional Neural Network (CNN) image model, you're in the right place. More often than not, this stems from an oversized batch size.

Understanding the Issue: Consider the batch size as the number of images loaded into RAM for each training iteration. For instance, if your batch size is set to 1000, this means 1000 images are loaded into RAM at once. If your dataset is dense with high-resolution images or if your RAM is limited, this can quickly lead to a crash.
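To get a feel for the numbers, here is a rough back-of-the-envelope estimate of how much RAM one batch of images occupies. I'm assuming 224x224 RGB images stored as 32-bit floats, which is a common setup but may not match yours, and the function name batch_bytes is hypothetical. The estimate covers only the raw input batch; the model's activations, gradients, and any extra copies come on top of it.

```python
def batch_bytes(batch_size, height, width, channels=3, bytes_per_value=4):
    """Approximate bytes needed to hold one batch of images in memory."""
    return batch_size * height * width * channels * bytes_per_value


# Compare a few batch sizes for assumed 224x224 RGB float32 images.
for size in (1000, 256, 64):
    mib = batch_bytes(size, 224, 224) / 1024**2
    print(f"batch_size={size}: {mib:.1f} MiB")
```

Since the cost scales linearly with the batch size, halving the batch size halves this part of the memory footprint.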

Solution: Reduce the batch size. The question is: to what value?

Trial-and-Error with Batch Size: Arriving at the optimal batch size is more of an art, perfected through experimentation. Here's a strategy:

    batch_size = 256  # start here; if the session still crashes, halve it
    batch_size = 128  # still crashing? halve again
    batch_size = 64   # keep halving until training runs without exhausting RAM

Ultimately, the ideal batch size will vary for everyone. It's contingent upon your dataset's characteristics and the available RAM. With a little patience and experimentation, you'll find the sweet spot that ensures efficient training without memory hiccups.
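The halving strategy can also be written as a small helper. This is only a sketch under my own assumptions: the name halve_until_fits, the 100 MiB budget, and the per-image size are all hypothetical. Because a crashed session cannot be caught from inside the notebook, the check here is an up-front estimate supplied by you, not a try/except around real training.

```python
def halve_until_fits(start, fits, minimum=1):
    """Return the first of start, start // 2, ... for which fits() is true."""
    if minimum < 1:
        raise ValueError("minimum must be at least 1")
    batch_size = start
    while batch_size >= minimum:
        if fits(batch_size):
            return batch_size
        batch_size //= 2
    raise ValueError("no batch size at or above the minimum fits")


# Assumed numbers: a 100 MiB budget for the input batch and
# 224x224 RGB float32 images (224 * 224 * 3 * 4 bytes each).
BUDGET_BYTES = 100 * 1024**2
BYTES_PER_IMAGE = 224 * 224 * 3 * 4

chosen = halve_until_fits(256, lambda b: b * BYTES_PER_IMAGE <= BUDGET_BYTES)
print(chosen)
```

In real training you would still confirm the chosen value by running a few iterations and watching Colab's RAM indicator.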

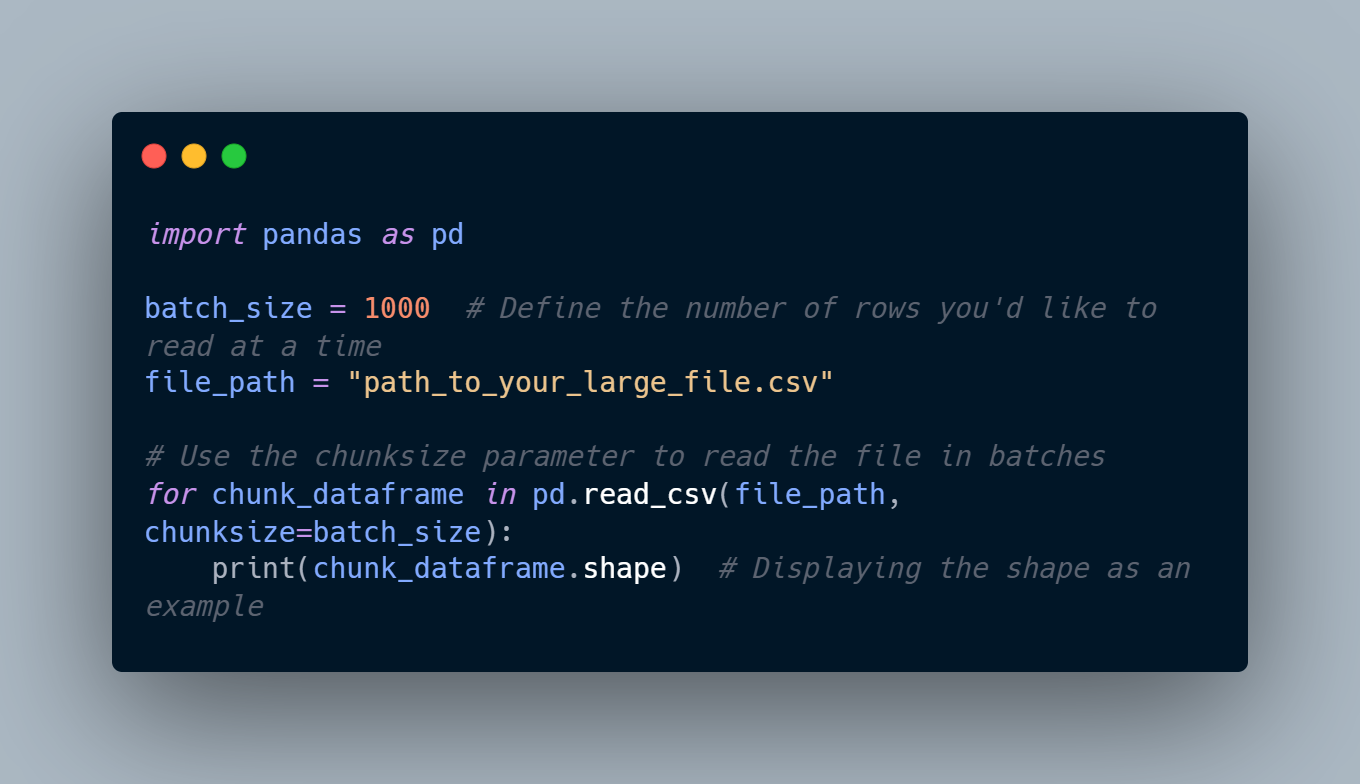

Handling Large CSV Files in Google Colab with Pandas

Ever faced an error while trying to read a colossal CSV file using Pandas in Google Colab? It's a common conundrum. Fortunately, Pandas offers a robust solution: reading CSV files in batches.

Why Batches? By reading files in chunks, or "batches", you can manage your RAM usage effectively, processing only a specific number of rows at any given time. This prevents the file from overwhelming the system's memory.

Let's Dive into the Code:

    import pandas as pd

    batch_size = 1000  # Define the number of rows you'd like to read at a time
    file_path = "path_to_your_large_file.csv"

    # Use the chunksize parameter to read the file in batches
    for chunk_dataframe in pd.read_csv(file_path, chunksize=batch_size):
        print(chunk_dataframe.shape)  # Displaying the shape as an example

In this example, the chunksize parameter dictates the number of rows to be read into the DataFrame with each iteration. Once loaded, you can treat chunk_dataframe as any regular DataFrame and apply familiar Pandas operations on it.
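Printing shapes is fine for a demo, but usually you want a result that spans the whole file. Here is a minimal sketch that computes a column mean across chunks by keeping only a running sum and row count. The column names city and sales and the small in-memory CSV are made up for illustration; with a real file you would pass its path to pd.read_csv instead of the StringIO object.

```python
import io

import pandas as pd

# Tiny in-memory CSV standing in for a large file on disk.
csv_text = "city,sales\nA,10\nB,20\nA,30\nB,40\nA,50\n"

running_sum = 0
row_count = 0

# Each chunk is a regular DataFrame holding at most 2 rows here.
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2):
    running_sum += int(chunk["sales"].sum())
    row_count += len(chunk)

mean_sales = running_sum / row_count
print(mean_sales)
```

The same pattern works for counts, minimums, maximums, and other statistics that can be combined from per-chunk partial results.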

👽 A Quick Note: While the above method tackles a widespread scenario, it's not exhaustive. If your specific situation isn't described here, don't hesitate to share it in the comments. I monitor comments daily, ensuring you'll receive a solution or guidance within 24 hours post-commenting.

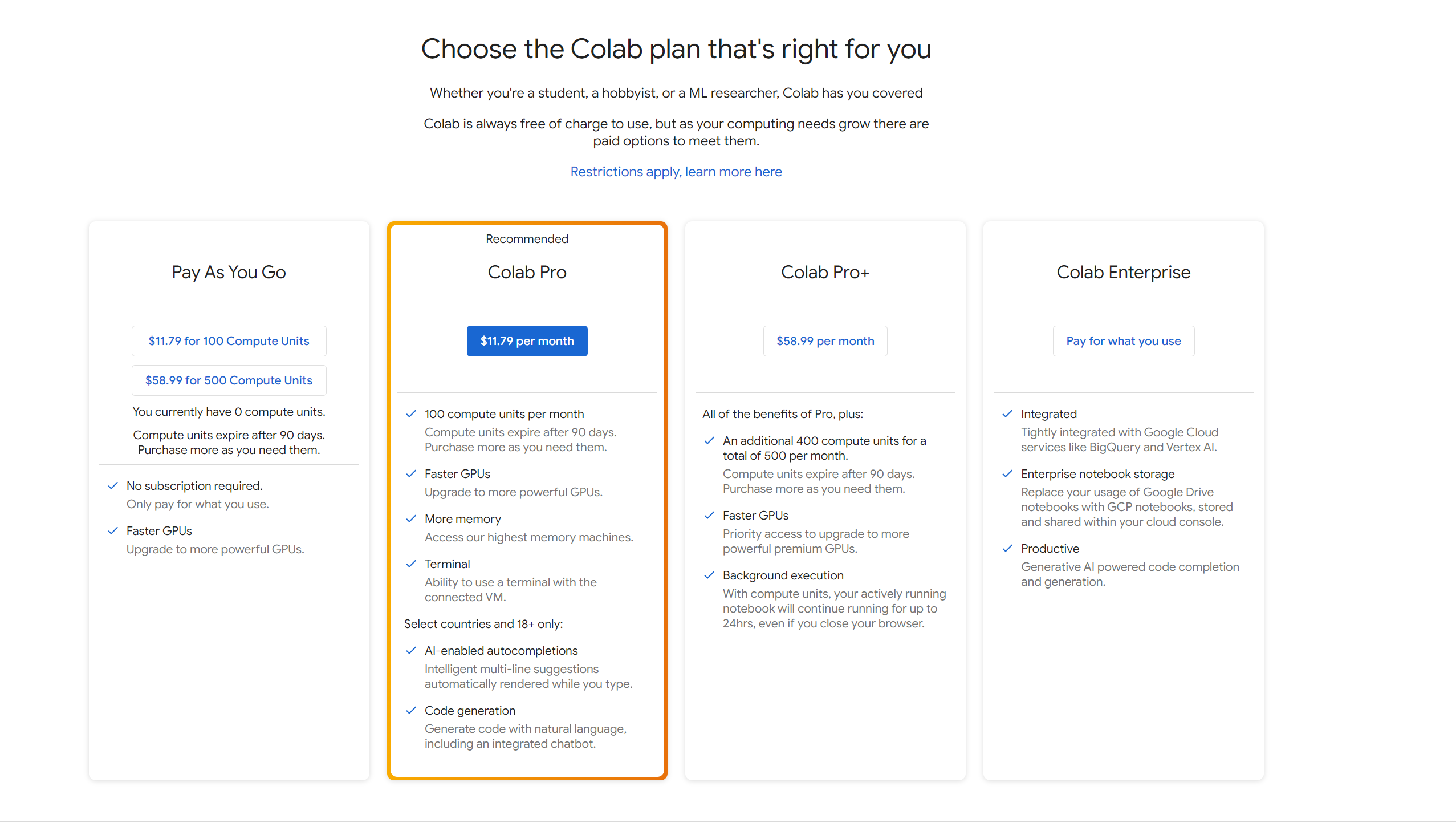

Upgrading to Google Colab Pro: A Seamless Solution

While the methods mentioned above are free and effective ways to manage large datasets and intensive computations on Google Colab, there's an even more straightforward resolution for those who can allocate a budget: upgrading to Google Colab Pro.

What's the Advantage?

With the Pro version, Google Colab substantially enhances your RAM and GPU limits. This means:

- Larger Datasets: Process more significant datasets without fretting about memory constraints.

- Faster Computation: Enjoy swifter execution times with access to better GPUs.

- Extended Idle Times: Work with longer idle times before your session gets disconnected.

For many, the upgrade can mean running their existing code seamlessly, without the need for chunking, batch adjustments, or other modifications.

How to Upgrade?

Visit the Google Colab website, and you'll find an option to transition to the Pro plan. The monthly fee offers substantial benefits, especially for those who frequently use Colab for heavy tasks.

🌟 In Conclusion: While free solutions often require tweaking and adaptations, opting for Google Colab Pro can simplify your workflow and elevate your computing capabilities. Think of it as an investment into smoother, faster, and more efficient data processing and machine learning operations.
